Image Caption Generator Using Deep Learning

Automatic Image Caption Generator

Overview of Deep Learning:

Deep learning and machine learning are among the fastest-advancing technologies of this era. Artificial intelligence is now often compared with the human mind, and in some fields AI already performs better than humans. New research appears in this field every day, and it is growing quickly because we now have enough computational power to do this kind of work.

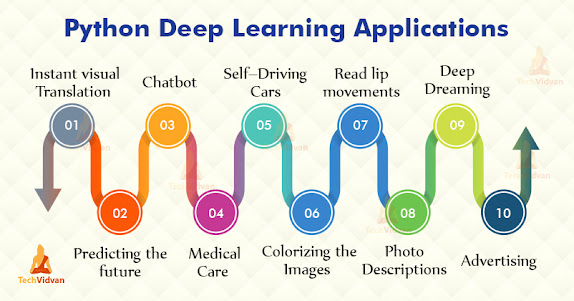

There are many interesting applications of deep learning, some of which are shown in the figure.

Now we will look at one of these applications: photo description, or image caption generation.

Image Caption Generator:

Image caption generation (or photo description) is one of the applications of deep learning. We pass an image to the model, the model processes it, and it generates a caption or description based on what it learned during training. The predictions are not always accurate, and the model sometimes generates meaningless sentences; better results require a lot of computational power and a very large dataset. Next, we will look briefly at the dataset and then jump directly to the neural network architecture of the image caption generator.

Prerequisites:

This project requires a good knowledge of deep learning, Python, working in Jupyter notebooks, the Keras library, NumPy, and natural language processing (NLP).

Make sure you have installed all the following necessary libraries:

- TensorFlow

- Keras

- Pandas

- NumPy

- NLTK (Natural Language Toolkit)

- Jupyter (IDE)
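To confirm that the libraries above are importable before opening the notebook, a small check like the one below can help. It is only a sketch: the import names are our assumption about how each package is imported, and Jupyter is detected through its `jupyter_core` package.

```python
import importlib.util

# Assumed import names for the libraries listed above;
# Jupyter is detected via its jupyter_core package.
REQUIRED_MODULES = ["tensorflow", "keras", "pandas", "numpy", "nltk", "jupyter_core"]


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All required libraries are installed.")
```

`find_spec` only locates each package without importing it, so the check stays fast even for large libraries such as TensorFlow.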

Dataset:

In this project, we use the Flickr30k dataset. It contains roughly 30,000 images (31,783 in total), and each image has an image ID and 5 reference captions.

Here is the link to the dataset, so you can download it yourself:

Flickr30k: Kaggle Flickr30k dataset
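Once downloaded, the captions need to be grouped by image ID. The sketch below assumes a captions file with a header row followed by two-column `image,caption` rows (for example `1000092795.jpg,<caption>`). The exact file name and delimiter depend on which Kaggle copy you download, so `load_captions` and its `delimiter` parameter are hypothetical and may need adjusting.

```python
import csv
import io
from collections import defaultdict


def load_captions(lines, delimiter=","):
    """Group captions by image ID.

    Assumes (hypothetically) a header row, then rows of the form
    <image file name><delimiter><caption>. Adjust to match your download.
    """
    reader = csv.reader(lines, delimiter=delimiter)
    next(reader)  # skip the header row
    captions = defaultdict(list)
    for row in reader:
        if len(row) < 2:
            continue  # skip blank or malformed rows
        image_id = row[0].strip().split(".")[0]  # "1000092795.jpg" -> "1000092795"
        caption = delimiter.join(row[1:]).strip()  # re-join if the caption had unquoted delimiters
        captions[image_id].append(caption)
    return dict(captions)


# Toy input with placeholder captions; for the real file you would pass an open
# file object instead, e.g. open("captions.txt", newline="", encoding="utf-8")
# (hypothetical file name).
sample = io.StringIO(
    "image,caption\n"
    "1000092795.jpg,first placeholder caption\n"
    "1000092795.jpg,second placeholder caption\n"
)
print(load_captions(sample))
# {'1000092795': ['first placeholder caption', 'second placeholder caption']}
```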

One of the images in the dataset, with ID 1000092795:

1000092795.jpg

Here are the captions for this image as they appear in the dataset.
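Before training, captions are usually normalized and wrapped in start/end markers so the decoder knows where a sentence begins and ends. A minimal sketch follows. The `startseq`/`endseq` marker words and the cleaning rules (lowercase, no punctuation, alphabetic tokens only) are common choices assumed here, not necessarily those of the original project.

```python
import string

# Translation table that deletes all ASCII punctuation characters.
_PUNCTUATION_TABLE = str.maketrans("", "", string.punctuation)


def clean_caption(caption):
    """Lowercase, strip punctuation, keep alphabetic tokens, add start/end markers."""
    words = caption.lower().translate(_PUNCTUATION_TABLE).split()
    words = [w for w in words if w.isalpha()]  # drops tokens such as "3"
    return "startseq " + " ".join(words) + " endseq"


def build_vocabulary(captions):
    """Map every word in the cleaned captions to an integer ID starting at 1.

    `captions` is a dict of image ID -> list of cleaned captions.
    ID 0 is left free so it can be used for padding.
    """
    words = sorted({w for caps in captions.values() for c in caps for w in c.split()})
    return {w: i + 1 for i, w in enumerate(words)}


print(clean_caption("Two dogs, playing in 3 parks!"))
# startseq two dogs playing in parks endseq
```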

The Architecture of Network :

1. Image Feature Extraction:

To extract image features, we use a pretrained model, VGG16, which is available out of the box in Keras (its ImageNet weights are downloaded the first time the model is created). VGG16 was proposed by Karen Simonyan and Andrew Zisserman of the Visual Geometry Group at the University of Oxford in their 2014 paper "Very Deep Convolutional Networks for Large-Scale Image Recognition."

At ILSVRC 2014, this model took first place in the localization task and second place in the classification task.

The figures show the model's architecture in 3-D and in 2-D.

Source: VGG16 | CNN Model (GeeksforGeeks)
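With Keras, VGG16 can be turned into a feature extractor by dropping its final classification layer and reading the 4096-dimensional output of the second fully connected layer (`fc2`). This is a sketch assuming TensorFlow 2.x with `tf.keras`; the helper names are ours, and using `fc2` rather than another layer is a common but assumed choice.

```python
import numpy as np
from tensorflow.keras.applications.vgg16 import VGG16, preprocess_input
from tensorflow.keras.models import Model
from tensorflow.keras.utils import img_to_array, load_img


def build_feature_extractor(weights="imagenet"):
    """Return VGG16 truncated at 'fc2', so it outputs 4096-d image features.

    With weights="imagenet" the pretrained weights are downloaded on first use.
    """
    base = VGG16(weights=weights)  # full model, expects 224x224 RGB images
    return Model(inputs=base.input, outputs=base.get_layer("fc2").output)


def load_image(path):
    """Load an image file, resize it to 224x224, and return a (1, 224, 224, 3) batch."""
    image = img_to_array(load_img(path, target_size=(224, 224)))
    return np.expand_dims(image, axis=0)


def extract_features(extractor, image_batch):
    """Apply VGG16's preprocessing and return a (batch_size, 4096) feature array."""
    # preprocess_input may modify a float array in place, so work on a copy
    return extractor.predict(preprocess_input(image_batch.copy()), verbose=0)


# Example usage (the file path is hypothetical):
# extractor = build_feature_extractor()
# features = extract_features(extractor, load_image("flickr30k_images/1000092795.jpg"))
# features.shape -> (1, 4096)
```

Extracting features once for every image and saving them (for example with `pickle` or `np.save`) avoids running VGG16 again in every training epoch.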

2. Text Generation with LSTM:

Long Short-Term Memory networks (usually just called "LSTMs") are a special kind of RNN, capable of learning long-term dependencies. They were introduced by Hochreiter & Schmidhuber (1997) and were refined and popularized by many people in subsequent work. They work tremendously well on a large variety of problems and are now widely used.

LSTM Architecture

Source: LSTM Networks

Read more: LSTM Networks Full explained
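To make the gating concrete, here is one time step of a standard LSTM cell written in NumPy. It is an illustrative sketch, not how Keras implements its `LSTM` layer internally; packing all four gates into one weight matrix `W`, and the gate order used, are our own choices.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One time step of a standard LSTM cell.

    W has shape (4 * hidden, hidden + input) and b has shape (4 * hidden,).
    Row blocks of W (in this sketch's order): forget, input, candidate, output.
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[:hidden])                # forget gate: what to drop from the cell state
    i = sigmoid(z[hidden:2 * hidden])      # input gate: how much new information to write
    g = np.tanh(z[2 * hidden:3 * hidden])  # candidate values for the cell state
    o = sigmoid(z[3 * hidden:])            # output gate: how much of the cell to expose
    c_t = f * c_prev + i * g               # updated long-term memory
    h_t = o * np.tanh(c_t)                 # new hidden state (the cell's output)
    return h_t, c_t


# Run the cell over a random length-5 sequence of 2-d inputs with 3 hidden units.
rng = np.random.default_rng(0)
hidden_size, input_size = 3, 2
W = rng.normal(size=(4 * hidden_size, hidden_size + input_size))
b = np.zeros(4 * hidden_size)
h, c = np.zeros(hidden_size), np.zeros(hidden_size)
for x in rng.normal(size=(5, input_size)):
    h, c = lstm_step(x, h, c, W, b)
```

The additive update of `c_t` is what lets information survive across many time steps, which is why LSTMs handle long-term dependencies better than plain RNNs.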

Now we combine these two architectures into a single model; this is our final model, which generates captions from images.

Main Model Architecture:

Here VGG16 serves as the CNN encoder and is combined with an LSTM layer. VGG16 extracts the image features, while the captions that already come with the dataset are turned into input/output sequences for next-word prediction. We then feed the decoder with X, made up of the image features and the partial caption, and Y, the next word in the output sequence. That is how the model learns both to 1) find image features and 2) generate text word by word.
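The X/Y pairs described above can be built by sliding over each encoded caption: every prefix of the caption becomes an input sequence, and the word that follows it becomes the target. The sketch below assumes captions are already mapped to integer IDs starting at 1 (0 is reserved for padding), so `vocab_size` must be one more than the number of words; `make_training_pairs` is a hypothetical helper name.

```python
import numpy as np


def make_training_pairs(caption_ids, photo_features, max_length, vocab_size):
    """Turn one encoded caption into (image features, partial caption) -> next word pairs.

    caption_ids: word IDs for "startseq ... endseq" (0 is reserved for padding).
    Returns X_image, X_seq (left-padded with zeros) and one-hot Y arrays.
    """
    X_image, X_seq, Y = [], [], []
    for i in range(1, len(caption_ids)):
        prefix = caption_ids[:i][-max_length:]              # words seen so far
        padded = [0] * (max_length - len(prefix)) + prefix  # pre-pad with zeros
        target = np.zeros(vocab_size)
        target[caption_ids[i]] = 1.0                        # one-hot next word
        X_image.append(photo_features)
        X_seq.append(padded)
        Y.append(target)
    return np.array(X_image), np.array(X_seq), np.array(Y)


# "startseq a dog endseq" encoded with a toy vocabulary
# {"a": 1, "dog": 2, "endseq": 3, "startseq": 4}:
X_image, X_seq, Y = make_training_pairs([4, 1, 2, 3], np.ones(5), max_length=4, vocab_size=5)
print(X_seq.tolist())  # [[0, 0, 0, 4], [0, 0, 4, 1], [0, 4, 1, 2]]
```

Each caption of n words yields n - 1 training pairs, so for a dataset of this size the pairs are usually produced batch by batch with a generator rather than held in memory all at once.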

That’s how we can generate captions from images.
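At prediction time, the caption is generated one word at a time. A simple greedy decoder is sketched below: it starts from `startseq`, repeatedly picks the most probable next word, and stops at `endseq` or after `max_length` words. `predict_next` is a hypothetical callback standing in for the trained model, which keeps the decoding logic testable on its own. Greedy search is our choice here; beam search is a common alternative.

```python
import numpy as np


def generate_caption(predict_next, photo_features, word_to_id, max_length):
    """Greedy decoding from "startseq" until "endseq" or max_length words.

    predict_next(photo_features, padded_ids) must return a probability vector
    over word IDs. With a trained Keras model it could be, for example:
        lambda feats, ids: model.predict([feats, np.array([ids])], verbose=0)[0]
    where feats has shape (1, 4096).
    """
    id_to_word = {i: w for w, i in word_to_id.items()}
    words = ["startseq"]
    for _ in range(max_length):
        ids = [word_to_id[w] for w in words][-max_length:]
        padded = [0] * (max_length - len(ids)) + ids  # same pre-padding as in training
        next_id = int(np.argmax(predict_next(photo_features, padded)))
        word = id_to_word.get(next_id)
        if word is None or word == "endseq":
            break
        words.append(word)
    return " ".join(words[1:])  # drop the "startseq" marker
```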

Model Summary:

GitHub: Image-Caption-Generator
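For reference, a model combining the two branches described earlier could be defined in Keras as follows. This is a sketch of the common "merge" design (image features and LSTM output are added together before the final softmax), assuming TensorFlow 2.x. The layer sizes, dropout rates, and function name are illustrative assumptions rather than the exact configuration of the original project.

```python
from tensorflow.keras.layers import LSTM, Dense, Dropout, Embedding, Input, add
from tensorflow.keras.models import Model


def build_caption_model(vocab_size, max_length, feature_size=4096, units=256):
    """Merge-style captioning model (illustrative sizes).

    Inputs: VGG16 features of shape (feature_size,) and a padded caption
    prefix of shape (max_length,). Output: next-word probabilities.
    """
    # Image branch: VGG16 fc2 features -> dense projection
    image_input = Input(shape=(feature_size,))
    image_dense = Dense(units, activation="relu")(Dropout(0.5)(image_input))

    # Text branch: word IDs -> embedding (ID 0 masked as padding) -> LSTM
    seq_input = Input(shape=(max_length,))
    seq_embed = Embedding(vocab_size, units, mask_zero=True)(seq_input)
    seq_lstm = LSTM(units)(Dropout(0.5)(seq_embed))

    # Decoder: combine both branches and predict the next word
    decoder = Dense(units, activation="relu")(add([image_dense, seq_lstm]))
    output = Dense(vocab_size, activation="softmax")(decoder)

    model = Model(inputs=[image_input, seq_input], outputs=output)
    model.compile(loss="categorical_crossentropy", optimizer="adam")
    return model
```

Calling `build_caption_model(vocab_size, max_length).summary()` prints a layer-by-layer table of shapes and parameter counts. Training then uses `model.fit([X_image, X_seq], Y, ...)` with the image features, padded caption prefixes, and one-hot next words.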

Conclusion:

That is how we can build an image caption model using VGG16 and an LSTM on the Flickr30k dataset. Along the way, you learned about the VGG16 and LSTM models. You can also try other datasets, such as Flickr8k, the COCO dataset, and many more.
